One of the main reasons one enters academia is to play a role in disseminating knowledge. My career as an academic coincided with revolutionary developments in technology culminating in the development (with my colleague Kevin Wayne) of a new model for disseminating knowledge. We have created an extensive amount of content (see the COURSES page here for access to it) and have been using it to reach millions of people worldwide.

A 21ST-CENTURY MODEL

FOR DISSEMINATING KNOWLEDGE

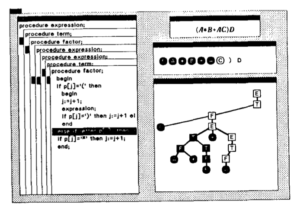

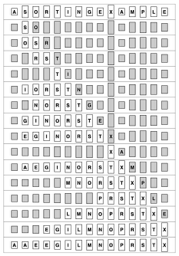

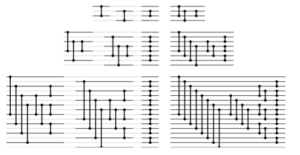

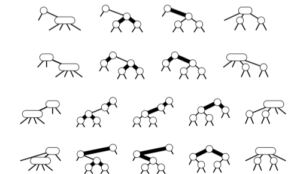

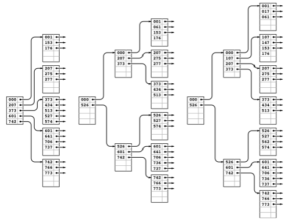

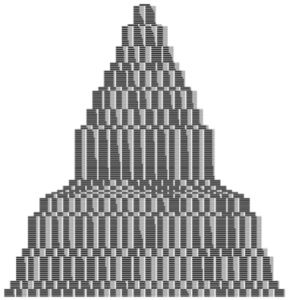

Textbooks. A central focus of my career since 1975 has been creating textbooks. People in my generation had the unique challenge of teaching without the benefit of the time-tested textbooks that our peers in other fields throughout the university were depending on. This turned into a unique opportunity—many of us were able to define the field by writing textbooks.

My books have sold over one million copies. On the basis of the experience of creating them, I know that a good textbook has the following features:

- Serves as guiding force and “ground truth” for students learning a core subject.

- Distills a lifetime of faculty experience for future generations.

- Provides a reference point for courses later in the curriculum.

These features still have substantial value, and textbooks are here to stay. Some people might prefer electronic versions, but a substantial number prefer physical textbooks (or both). One possible reason: Printed books enjoy the benefit of 500 years of development since Gutenberg while online content has only been around for several decades.

Booksites. Still, online content can vastly leverage printed material in at least the following ways:

- Provide readily accessible presence on the web.

- Allow for regular updates and extensions.

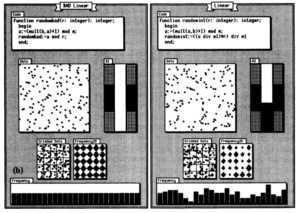

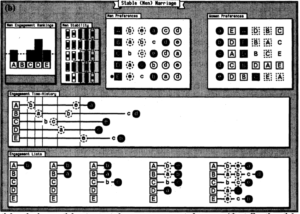

- Provide content types not feasible in print, such as production code, test data, and animations.

These features have substantial value–online content is here to stay.

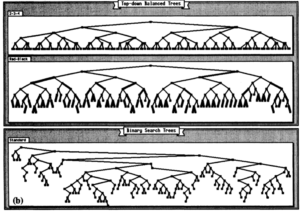

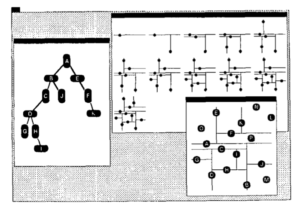

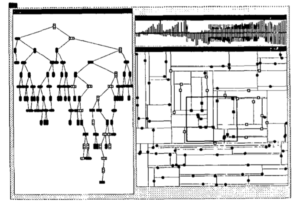

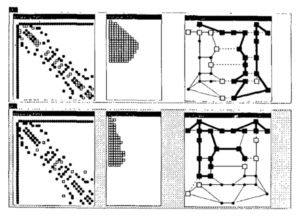

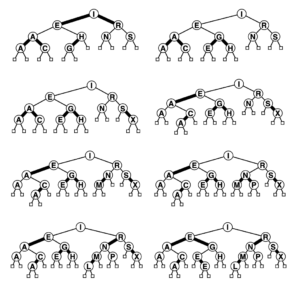

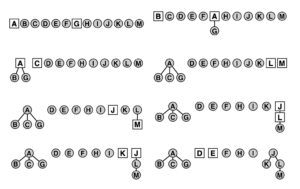

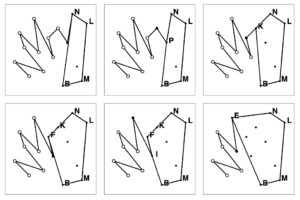

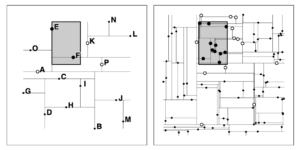

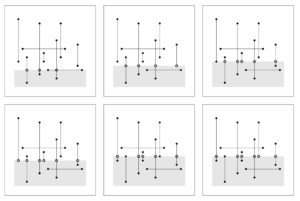

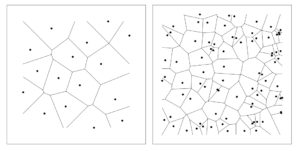

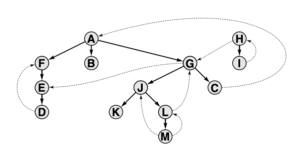

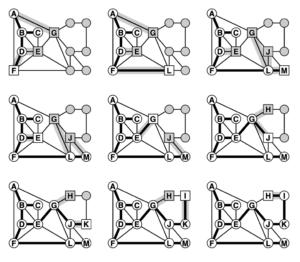

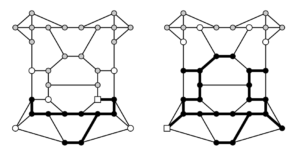

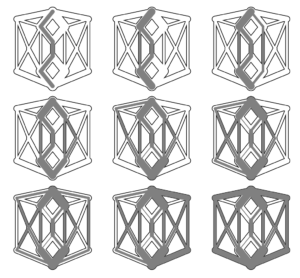

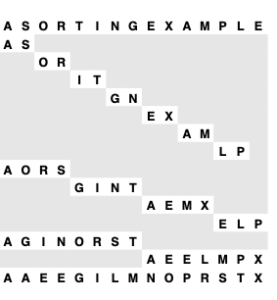

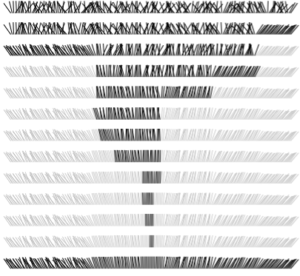

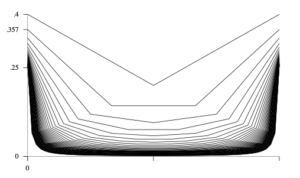

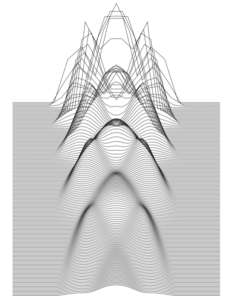

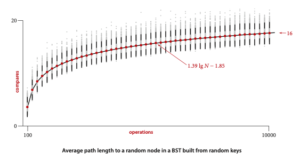

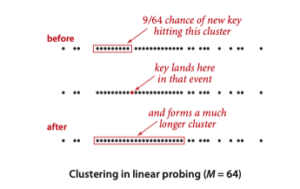

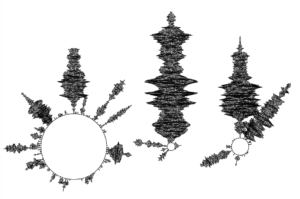

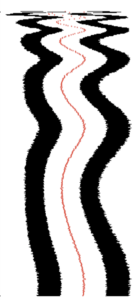

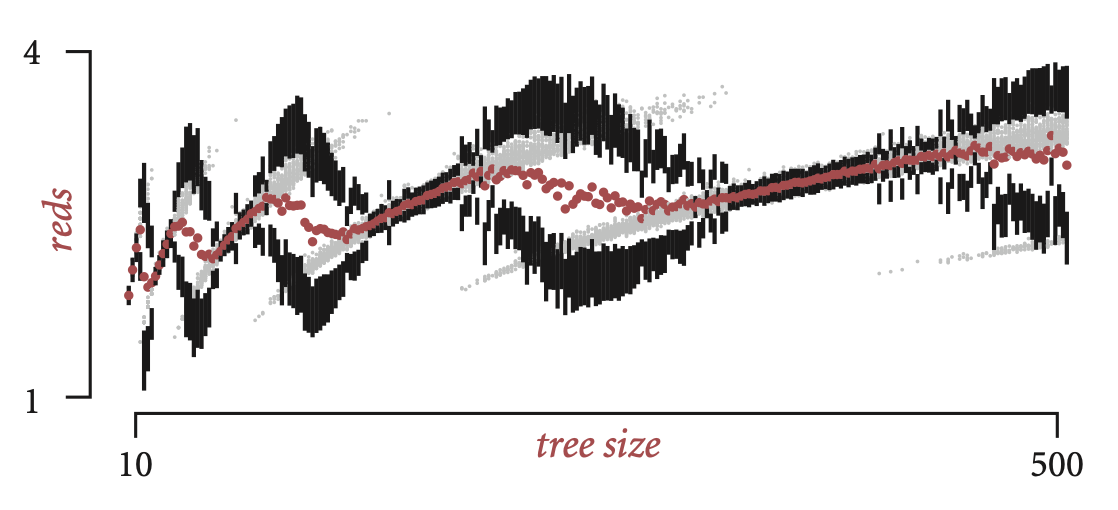

Why not just put the book online, as an e-book? Because it was not designed to be read online! For decades, I have been learning how to present the content on the printed page in the best possible light and how to create detailed figures that supplement the text (see the Visualization tab here). Yes, appealing presentation of content is also important for the booksite, but its main purpose is to facilitate searching, following links and scrolling on a variety of devices and to provide access to content types not possible on the printed page. Development of best practices for online content is still a work in progress (and a few centuries behind developments for the printed page).

Not long after he arrived at Princeton in 1998, Kevin Wayne conceived of the idea of organizing and developing the content for our introductory computer science course online, for immediate use by our students, but also in anticipation of using it for a book someday. The booksites that Kevin and I have developed over the next two decades now consist of tens of thousands of files and have attracted tens of millions of page views. They are unique and valuable resources. A good way to understand what they can contribute is to spend some time browsing through them.

Computer Science: An interdisciplinary approach

Algorithms (4th edition)

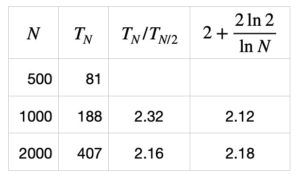

Analysis of Algorithms

Analytic Combinatorics

Lecture Videos. Even more ubiquitous than a textbook is the idea of a lecture. Faculty and students regularly gather together, often in a large lecture hall, for the lecturer to present material to the students so that they may learn it. This model has been an essential part of education for a very long time because it

- Allows instructors to communicate directly with students.

- Stimulates the development of a “community of scholars”.

- Encourages great teachers to inspire large groups of students.

These features have substantial value, and lectures are here to stay.

But it is not necessary for everyone to gather at the same place and time. It has long been recognized that large live lectures have substantial drawbacks because they

- Require significant time and effort for preparation, which is largely duplicated by instructors around the world.

- Place students in a passive role, just listening to the lecture (and maybe taking notes).

- Move at one fixed pace, which cannot be the right one for all students. Most students in a large live lecture are absent, lost, or bored.

Despite these obvious and well-researched drawbacks, large live lectures are the overwhelmingly predominant method of instruction in US universities and many other educational institutions.

By contrast, making well-produced videos of lectures available to students encourages them to

- Actively choose the time and place they learn. No one is absent.

- Actively choose their own pace. Students can speed up the video if bored or slow it down if lost.

- Review the lecture at any time. Students can review material until they understand it, and refer back to it for exams.

Once one has experienced the ability to consume lectures when and where one wants, going back to having to be at a particular time and place to hear a lecture seems antiquated. It’s like the difference between having to watch Seinfeld at 9PM on Thursday nights and binge-watching episodes on a whim.

It is true that lectures have to be well produced. I spent 50-100 hours of preparation for each lecture and needed skill in using Unix, emacs, OS X, Dropbox, Illustrator, Keynote, TeX, HTML, MathJax, Mathematica, PostScript, and a dozen other tools I can’t even name. But it was less time-consuming than writing a book.

The most important development for the future, which is just starting to take hold, is that faculty will adopt online lectures prepared elsewhere instead of preparing and delivering their own, freeing up time for them to decide what material they want their students to learn, to appropriately assess their progress, and to help them succeed.

Online services. In recent years, there has been explosion of research and development in educational technology. I have only been peripherally involved, but it is appropriate to mention here some of the tools that we have been using:

- Websites built with HTML that provide all course information for students.

- Tigerfile for managing programming assignment submissions.

- codePost for grading programming assignments.

- Zoom for class and precept meetings and office hours.

- Gradescope for online exams.

- CUbits to curate, serve, and monetize lectures.

- Quizzera for online quizzes.

- Coursera for free online MOOCs.

- Safari for access to content in a corporate setting.

- Ed for student Q&A and peer discussion.

- E-mail for authoritative communication from faculty to students.

Taken together, such tools comprise a fourth component of our model as they have allowed us to effectively scale our offerings to reach large numbers of students. The suite of available tools is constantly evolving and remains an exciting area of research and development. Their true potential is yet to be realized.

HISTORICAL PERSPECTIVE

I could write a book on this topic, but I’ve written enough books, and it’s not really my area of research expertise. Instead, my intent is to provide context that can help explain the evolution of my thinking on these matters. Much of my thinking stems from a series of invited talks that I gave around the world in the years before the pandemic.

Origins. For more than a century, faculty at colleges and universities would disseminate knowledge by writing papers and books, delivering large live lectures, and leading small groups of students in discussion or problem-solving sessions. In the 1970s, things began to change.

Desktop publishing. My thesis was written out by hand and typed by a secretary, as were my early papers. The process could take over a year. In the span of a decade (the 1970s, roughly) the infrastructure that we enjoy today emerged, where dissemination can be achieved in an instant. I was fortunate to have the opportunity to play a role in this revolution, first at Xerox PARC and later at Adobe. It is hard to overstate the impact of experiencing these changes as an academic. We could write detailed class notes and assignments instead of writing problems on the board in class, and the time between finishing a paper or a book and publishing it shrank from a year or more to a month or less. More important for me was the fact that the entire creative process was in my hands, not left to a secretary or a printer. This aspect of desktop publishing was controversial to some. Didn’t faculty have more important things to do than to worry about fonts and the like? Maybe, but a surprising number of talented computer scientists, from Knuth and Kernighan to Simonyi and Warnock, dedicated themselves to advancing this art, and we are all in their debt. Personally, I spent much of the 1980s and 1990s using state-of-the-art digital publishing tools to create my Algorithms books.

A dream realized. By the turn of the century, it was clear that the internet and the personal computer were going to play a dominant role in the dissemination of knowledge. But the idea had been envisioned half a century earlier, by Vannevar Bush. In 1997, I delivered a speech to Princeton’s first-year class telling that story. This speech is still remarkably relevant today. When Google revolutionized the internet a year later, the last piece of Bush’s vision was realized. We could imagine having access to the world’s knowledge on a personal device, we could disseminate knowledge just by posting it, and anyone could have access to it.

But how, exactly? In the early years, everyone was burned by the experience of advancing technology rendering content obsolete, even lost. A new version of a text editor, or a new editor, or a new company might mean that my online contribution to knowledge would disappear. That did not happen with paper. I can still find my mother’s PhD thesis, written nearly a century ago, at the Brown library. Whatever editor or typesetting system or markup language you are using, are you sure what you are writing now will be readily available in a century? I’m not, and I consider this problem to still be open. In late 1997, I wrote a short letter to the New Yorker making this very point. Not much has changed since, really.

Booksites. As the internet came into daily use in the 1990s, first thing that faculty did was to post assignments, deadlines, exam dates and the like on course websites, a much more convenient way to handle such information than writing it on the blackboard or preparing paper handouts. Naturally, people started putting more and more content on these sites, too. But much of this content seemed specific to the course and ephemeral, when some of it was destined for a textbook.

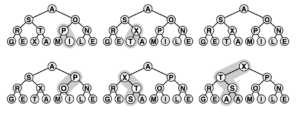

When Kevin Wayne began working with me in 1998, he started putting significant amounts of content on a webpage, separate from any course offering. For nearly a decade, we used this page as a development laboratory for our new textbook. Everything went on the web, but we could cherrypick the information there while creating the coherent story needed for a textbook. Once the book was published, the page became a valuable resource for readers, providing all kinds of content that unsuitable for a book. Thus was born the concept of a booksite. It was so successful that we created another one for Algorithms, which significantly increased the appeal of that book, even though it was already a bestseller. Then I did the same for Analysis of Algorithms and Analytic Combinatorics, making them much more accessible to many more people than the books alone could be.

Our booksites now have tens of thousands of pages that draw millions of unique visitors each year and tens of millions of pageviews.

MOOCs. Should we include videos of some sort in our booksites? It is a natural idea, but it just never seemed worth the effort. Things changed in 2012 when Daphne Kollar and Andrew Ng formed Coursera and encouraged educational institutions to vastly expand their reach by putting lecture videos online. Having just revamped our lecture slides for the fifth or sixth time, Kevin and I realized immediately that they would be perfectly suited to doing this, and we produced Algorithms for the Coursera platform. It was immediately successful and has already reached over 1 million people.

Over the next several years, I recorded over a hundred hours of videos that are the basis for six Coursera courses. For me, the appeal of these courses is that they make the content accessible to a huge number of people whoo otherwise would not have access to them. I’ve always been uneasy about the model of issuing certificates or otherwise trying to compete with existing educational institutions. My preference is to develop materials that can enable any of the millions of teachers around the world do their job more effectively and efficiently. That is what the “21st century model” is all about. Our experience is that many faculty are reluctant to have their students take a MOOC, but we are often able to convince people that watching videos in conjunction with the booksite and textbook can free time for them to work on curating the material, developing assessments, and helping their students succeed.

Moving my Princeton courses online. After recording the last lecture in our introductory course Computer Science in the early summer of 2015, I was exhausted and thought to myself that it would be very difficult for me to deliver that lecture again in the classroom. I had spent many, many hours preparing the slides for that lecture (and all the others). One of the most important aspects of the lectures was that the lecture slides were very detailed, but the information on a lecture slide would appear gradually, controlled by a remote in my hand. Creating and checking these “builds” was very time-consuming but also excellent preparation for the lecture. I didn’t need any rehearsal, as I knew what was going to appear on the screen each time I advanced the remote, and just talked about it. One of the last slides in the last lecture was a simulation of a CPU circuit, with over a hundred builds. To deliver that lecture again in a year, I would need to revisit all the decisions I made in sequencing those builds—I knew that would be a difficult and time-consuming job. And why do it? I couldn’t do as good a job as just captured in the video. And if I couldn’t do it, no one else could either.

I knew then that the days of me or someone else reading my slides to their students in a large lecture hall were over. In September 2015, I delivered a “last live lecture” where I explained the change to the students, and haven’t looked back.

The pandemic. The full story remains to be written, but having moved my lectures online, the spring of 2019 went relatively smoothly in my courses. I promised the students that we would provide them with an experience comparable to that experienced by the thousands of students who have taken it in the past. I think that having the lectures online provided our course staff with the stability and breathing room needed to develop new ways of interacting with students and we were able to approach that ideal, despite the circumstances. My teaching ratings were among the highest I have ever earned. Moreover, plenty of students and faculty at other institutions were able to take advantage of our materials to help with their transition of online teaching.

THE FUTURE

I am often asked how the pandemic has affected my teaching. The answer is that the most important effect has been that no one hassles me about not having in-person meetings with students! My opinion is that the new ways of teaching that embrace technology are so much more effective and efficient than the old ones that do not.

Sure, there are plenty of situations where in-person instruction is valuable. Most teachers are drawn to the profession by the pleasure of the experience of kindling the light of understanding in a student or a group of students. But insisting that all instruction be done this way and only this way is counterproductive.

Rather than mapping out strategies to get back to old ineffective and inefficient teaching methods, we should be brainstorming ways in which we reach out to more students than ever before and taking them farther than ever before. To conclude, I’ll offer some comments on the way I do things now, in an attempt to illustrate what is possible.

Lectures. Personally, I cannot conceive of building a course based on large live lectures again. Many people have created quality video content during the pandemic and are seeing the benefits of using it. Yes, good online lectures are difficult to produce, but the investment produces a much better experience for students and is certainly worthwhile when amortized over future and expanded usage.

E-mail. This old-school technology is effective for synchronizing a course. I send an e-mail to all officially registered students each week describing their responsibilities for the week. In my experience, students take such e-mails seriously.

Personal interaction. When I was teaching in a lecture hall with 400+ students, I certainly had little personal interaction with students. Now, when I walk across campus or attend a large conference, I am often approached by a student who seems to know me. Watching lectures regularly in a private setting gives each student a sense of a personal relationship with me.

Grading. Anyone not using online tools for grading needs to rethink what they are doing. In our first-year classes, we grade hundreds of student programs in a matter of hours each week, with high standards for personal feedback and fairness. In my advanced class, I grade dozens of advanced mathematical derivations in well under an hour—speaking of personal interaction, I get to know those students relatively well. And in both classes, I can do assessments that are auto-graded. Zero time spent grading is tough to beat. And these experiences only scratch the surface, with sea-assessments and crowdsourcing already proven to be effective.

Discussion forums. Online discussion forums have replaced office hours, having proven extremely effective and efficient in helping us answer student questions. It took some experience for us to guide students in using this resource effectively, but it is now indispensible.

AND THIS is all from the perspective of decades of clinging to old ways and having to be convinced to adopt new approaches. As ever, the next generation, unburdened, will take us places we cannot even imagine today.

Professors Behind the MOOC Hype (Chronicle of Higher Education)

A 21st Century Model for Disseminating Knowledge (RS invited lecture)

A 21st Century Model for Disseminating Knowledge (video of MIT lecture)